Craft your own Generative AI Chatbot with Amazon Bedrock with Theo Lebrun

At Devnexus, Theo Lebrun, Data Engineer and Technology Consultant at Ippon Technologies, delivered a hands-on guide to building generative AI chatbots with Amazon Bedrock. He began by situating generative AI in today’s landscape, noting the growing ecosystem of models such as Llama, Titan, and Claude. A central technique he emphasized is Retrieval Augmented Generation (RAG), which enhances chatbot accuracy by incorporating an organization’s private data—like internal documents or databases—into LLM prompts. This approach allows companies to build AI solutions tailored to real business needs without the costly overhead of retraining models.
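
To make the RAG idea concrete, here is a minimal sketch of the retrieve-then-prompt step. It is not code from the talk: the sample documents, the function names, and the bag-of-words similarity are all assumptions chosen so the example runs without any external service. A real system would use an embedding model and a vector database instead of word counts.

```python
import math
import re
from collections import Counter

# Hypothetical stand-in for an organization's private documents.
DOCUMENTS = [
    "Expense reports must be submitted within 30 days of purchase.",
    "The VPN client is required when working outside the office.",
    "New hires receive their laptop on the first day of onboarding.",
]


def tokenize(text: str) -> Counter:
    """Lowercase bag-of-words counts; a toy substitute for a real embedding."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(question: str, documents: list[str], k: int = 2) -> list[str]:
    """Return the k documents most similar to the question."""
    q = tokenize(question)
    ranked = sorted(documents, key=lambda d: cosine(q, tokenize(d)), reverse=True)
    return ranked[:k]


def build_rag_prompt(question: str, documents: list[str], k: int = 2) -> str:
    """Place the retrieved passages in the prompt ahead of the question."""
    context = "\n".join(f"- {d}" for d in retrieve(question, documents, k))
    return (
        "Answer using only the context below. "
        "If the answer is not there, say so.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}"
    )
```

The model itself is never retrained here; only the prompt changes, which is the cost advantage the talk points to.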

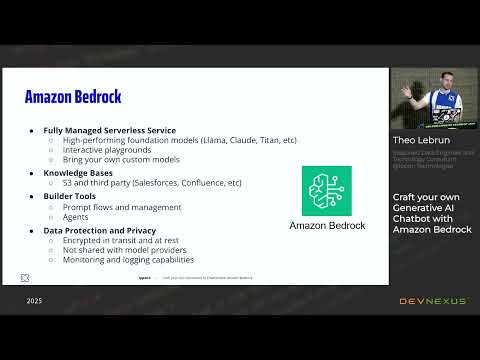

Lebrun then explored Amazon Bedrock, a fully managed, serverless service that provides access to foundation models and simplifies generative AI application development. Bedrock offers a playground for experimentation, options for hosting custom models, and Knowledge Bases that connect to data sources such as S3, Salesforce, or Confluence. These integrations let organizations inject proprietary data into chatbots securely. Lebrun highlighted Bedrock’s data protection measures: prompts are encrypted and never shared with model providers, and inference runs on AWS-hosted copies of the models. Combined with AWS’s monitoring and logging capabilities, this makes Bedrock a secure and scalable choice for enterprises pursuing AI initiatives.
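
As an illustration of what calling a Bedrock-hosted model can look like, the sketch below sends one user message through the Converse API of the `boto3` `bedrock-runtime` client. The model ID, region, and helper names are assumptions for the example, not details from the talk; running it for real requires AWS credentials and access to the chosen model.

```python
from typing import Any

# Assumed model ID for illustration; use one enabled in your account and region.
MODEL_ID = "anthropic.claude-3-haiku-20240307-v1:0"


def make_client(region: str = "us-east-1") -> Any:
    """Create a Bedrock runtime client (needs boto3 and AWS credentials)."""
    import boto3  # imported lazily so the rest of the sketch loads without it

    return boto3.client("bedrock-runtime", region_name=region)


def ask_bedrock(
    client: Any,
    prompt: str,
    model_id: str = MODEL_ID,
    max_tokens: int = 512,
) -> str:
    """Send a single user message via the Converse API and return the reply text."""
    response = client.converse(
        modelId=model_id,
        messages=[{"role": "user", "content": [{"text": prompt}]}],
        inferenceConfig={"maxTokens": max_tokens, "temperature": 0.2},
    )
    # The reply is a list of content blocks; this sketch reads the first text block.
    return response["output"]["message"]["content"][0]["text"]
```

Typical usage would be `ask_bedrock(make_client(), "Summarize our onboarding policy.")`. Passing the client in as a parameter keeps the function easy to exercise with a stub during development.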

The presentation also covered the practical development workflow of chatbot projects. Lebrun explained how to select frameworks like LangChain or LlamaIndex, choose vector databases for embedding storage, and implement effective data preparation strategies. Key considerations include identifying reliable sources, setting update frequencies, converting documents, and applying intelligent chunking for optimal RAG performance. He showcased an internal chatbot built at Ippon Technologies that can answer questions from resumes, case studies, and internal documentation, demonstrating the concrete value of these systems in day-to-day operations. Lebrun closed by stressing the importance of guardrails, monitoring, and feedback mechanisms, as well as tips like including timestamps and conversation history in prompts to boost reliability and user satisfaction.
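
Two of these tips lend themselves to a short sketch: splitting documents into overlapping chunks, and adding a timestamp and the conversation history to the prompt. The function names, the word-based chunk sizes, and the prompt layout below are assumptions for illustration; the talk does not prescribe a specific chunking strategy or template.

```python
from datetime import datetime, timezone
from typing import Optional


def chunk_words(text: str, size: int = 50, overlap: int = 10) -> list[str]:
    """Split text into chunks of `size` words that share `overlap` words."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must be >= 0 and smaller than size")
    words = text.split()
    step = size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + size]))
        if start + size >= len(words):
            break  # the last chunk already reaches the end of the text
    return chunks


def build_chat_prompt(
    question: str,
    history: list[tuple[str, str]],
    context: list[str],
    now: Optional[datetime] = None,
) -> str:
    """Assemble a prompt with the current time, retrieved context, and prior turns."""
    now = now or datetime.now(timezone.utc)
    history_text = "\n".join(f"{role}: {message}" for role, message in history)
    context_text = "\n".join(f"- {chunk}" for chunk in context)
    return (
        f"Current time (UTC): {now.isoformat(timespec='seconds')}\n\n"
        f"Context:\n{context_text}\n\n"
        f"Conversation so far:\n{history_text}\n\n"
        f"User: {question}"
    )
```

The overlap keeps a sentence that straddles a chunk boundary retrievable from either side, and the timestamp gives the model a reference point for questions such as "what changed this week?".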

Lebrun’s talk makes clear that enterprise-ready AI chatbots require more than just powerful LLMs—they demand thoughtful integration of private data, secure infrastructure, and best practices in design and monitoring. With tools like Amazon Bedrock and RAG, developers can go beyond generic chatbots to build scalable, secure, and highly accurate AI assistants tailored to their organization’s needs.

Join Us at Devnexus 2026

Talks like this are just a glimpse of the insights shared at Devnexus. Join us next year to explore the tools, frameworks, and ideas shaping the future of software—and be part of a community pushing technology forward.

👉 Learn more at devnexus.com